Motivation

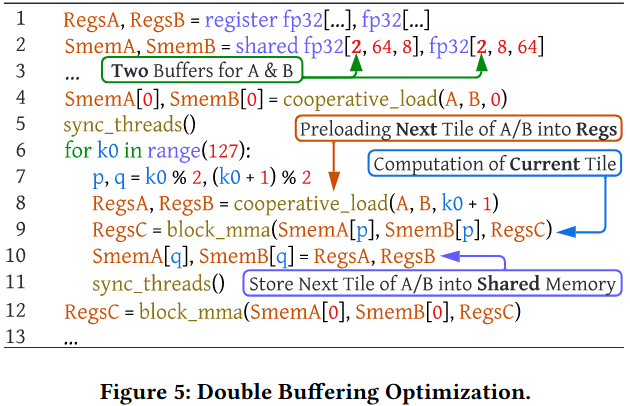

Limited Optimization Support: existing loop-oriented scheduling primitives cannot express optimizations such as double buffering, thread block swizzle, efficient use of the Tensor Core MMA PTX instruction, or multi-stage asynchronous prefetching.

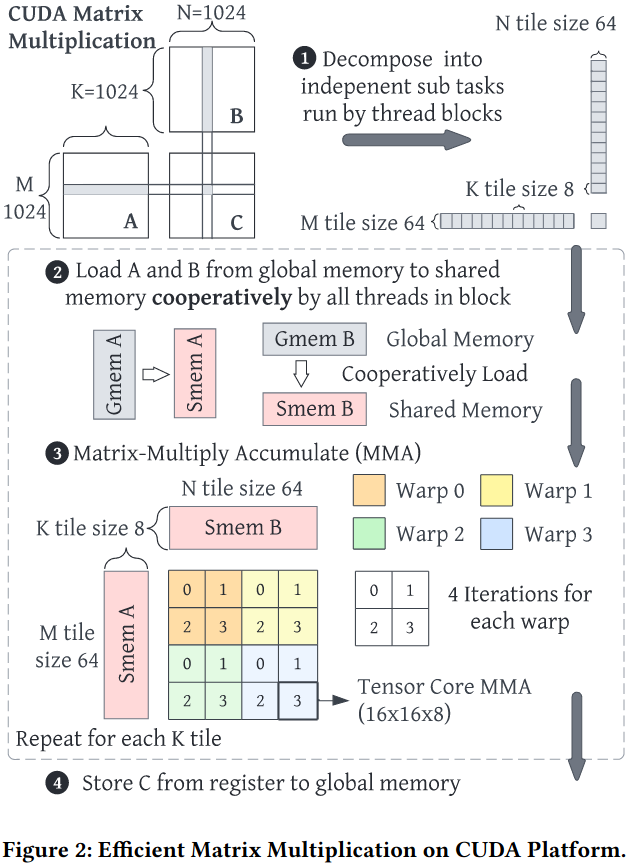

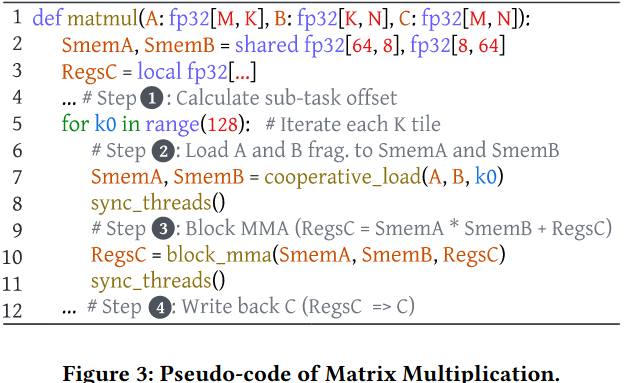

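The two figures above address one performance bottleneck: all threads in the same thread block may be stalled on the same type of hardware resource.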

Dedicated Schedule Template for Fusion

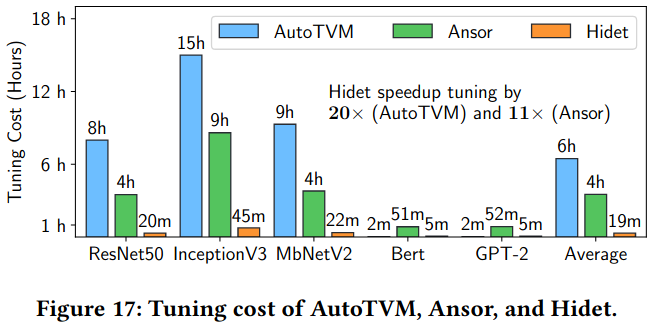

Long Tuning Time

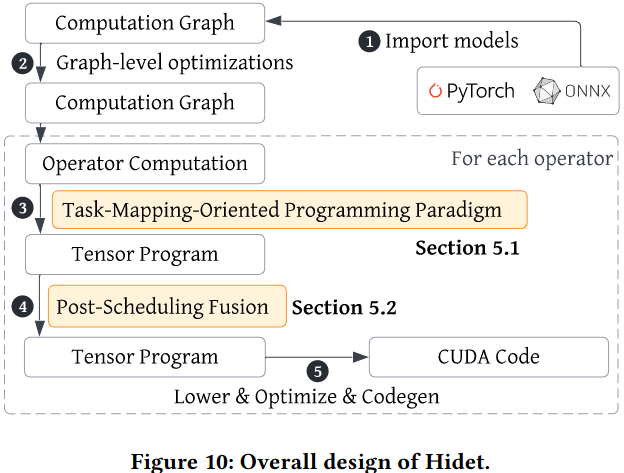

Hidet: System Design

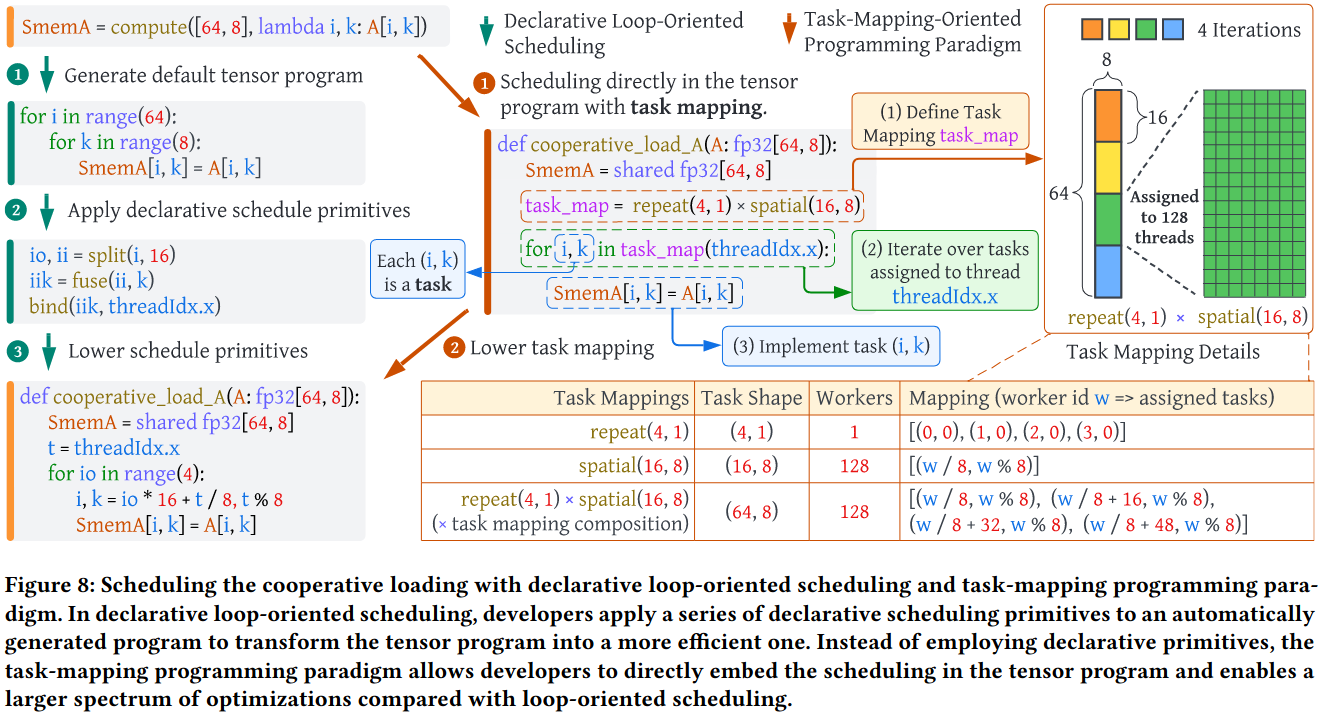

Task-Mapping Programming Paradigm

Task Mapping

- repeat(d1, …, dm): maps the task grid (d1, …, dm) to a single worker, which executes all of the tasks.

- spatial(d1, …, dm): maps the task grid (d1, …, dm) to the same number of workers, so each worker handles exactly one task.
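The two basic mappings above can be sketched in a few lines of Python. This is only an illustration, not Hidet's API: the function names mirror the notation above, the return value is simply a list of task indices for a given worker, and the row-major numbering of workers in `spatial` is an assumption made for the example.

```python
from itertools import product
from math import prod


def repeat(*shape):
    """Return a mapping in which one worker (worker 0) executes every task."""
    def tasks_of(worker):
        assert worker == 0, "repeat has exactly one worker"
        # Enumerate the whole task grid in row-major order.
        return list(product(*(range(d) for d in shape)))
    return tasks_of


def spatial(*shape):
    """Return a mapping in which each worker executes exactly one task."""
    num_workers = prod(shape)

    def tasks_of(worker):
        assert 0 <= worker < num_workers
        # Assumption: workers are numbered in row-major order over the grid.
        index = []
        for d in reversed(shape):
            index.append(worker % d)
            worker //= d
        return [tuple(reversed(index))]
    return tasks_of


print(repeat(2, 2)(0))   # all four tasks go to the single worker
print(spatial(2, 3)(4))  # worker 4 gets exactly one task
```

In a GPU kernel, `repeat` corresponds to a loop inside one thread, while `spatial` corresponds to distributing the grid over threads (or warps, or thread blocks).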

Task Mapping Composition

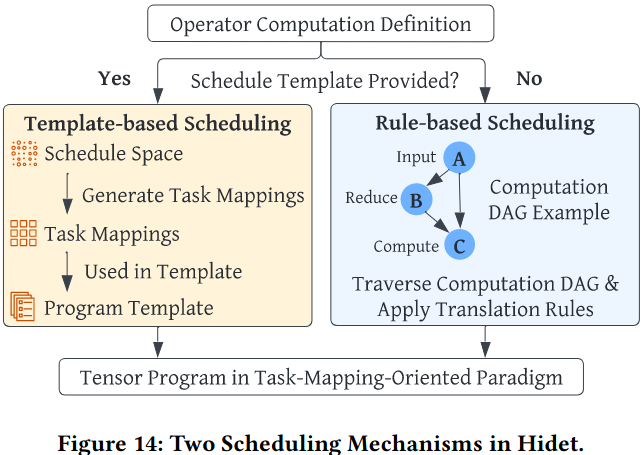
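A rough sketch of composition, assuming the definition as I understand it from the Hidet paper: composing an outer mapping f1 with an inner mapping f2 multiplies the task shapes and worker counts element-wise, worker w takes its outer tasks from f1(w // n2) and its inner tasks from f2(w % n2), and each resulting task index is t1 * d2 + t2. The `TaskMapping` class and the use of `*` for composition are hypothetical names for this example, not Hidet's actual API.

```python
from itertools import product
from math import prod


class TaskMapping:
    """Hypothetical container: a task shape, a worker count, and a worker -> tasks function."""

    def __init__(self, task_shape, num_workers, worker_to_tasks):
        self.task_shape = tuple(task_shape)
        self.num_workers = num_workers
        self.worker_to_tasks = worker_to_tasks

    def __call__(self, worker):
        assert 0 <= worker < self.num_workers
        return self.worker_to_tasks(worker)

    def __mul__(self, inner):
        """Compose: self is the outer mapping, inner refines each outer task."""
        assert len(self.task_shape) == len(inner.task_shape)
        shape = tuple(a * b for a, b in zip(self.task_shape, inner.task_shape))

        def worker_to_tasks(worker):
            outer_tasks = self(worker // inner.num_workers)
            inner_tasks = inner(worker % inner.num_workers)
            # Each outer task selects a tile; each inner task is an offset inside it.
            return [
                tuple(o * d + i for o, d, i in zip(t1, inner.task_shape, t2))
                for t1 in outer_tasks
                for t2 in inner_tasks
            ]

        return TaskMapping(shape, self.num_workers * inner.num_workers, worker_to_tasks)


def repeat(*shape):
    return TaskMapping(shape, 1, lambda w: list(product(*map(range, shape))))


def spatial(*shape):
    def worker_to_tasks(worker):
        index = []
        for d in reversed(shape):  # assumption: row-major worker numbering
            index.append(worker % d)
            worker //= d
        return [tuple(reversed(index))]
    return TaskMapping(shape, prod(shape), worker_to_tasks)


# 4 workers over a 4x4 grid: each worker owns one contiguous 2x2 tile.
tiled = spatial(2, 2) * repeat(2, 2)
# Same grid and worker count, but each worker's 4 tasks are strided across the grid.
strided = repeat(2, 2) * spatial(2, 2)
print(tiled(3))
print(strided(1))
```

The order of composition matters: `spatial * repeat` gives each worker a contiguous tile, while `repeat * spatial` interleaves the workers across the grid.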

Scheduling Mechanism

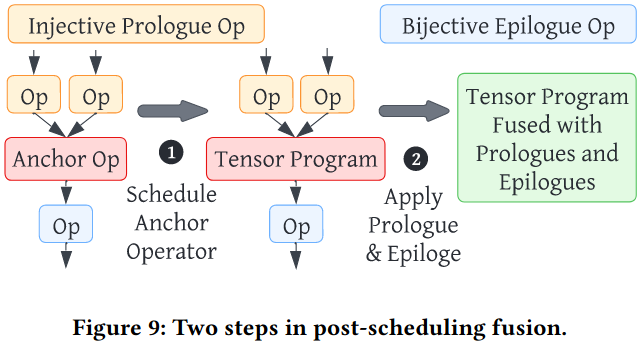

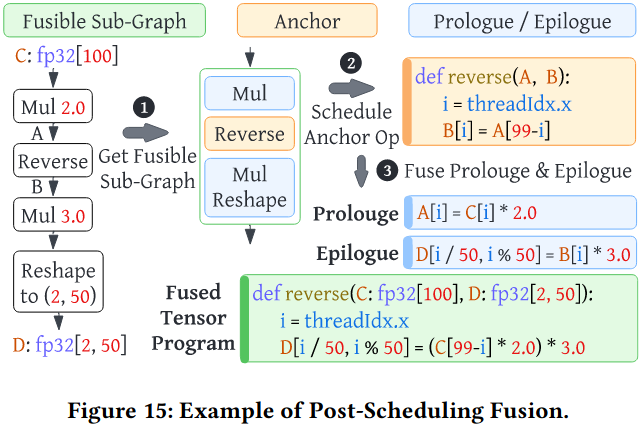

Post-Scheduling Fusion

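step 1: Schedule only the anchor operator.

step 2: Automatically fuse the surrounding operators into the already-scheduled anchor operator program.

Prologue operators: fusible operators that come before the anchor operator. Each must be an injective operator and must not contain a reduction computation.

Epilogue operators: fusible operators that come after the anchor operator. Each must be a bijective operator, i.e. each element of the input tensor contributes to only one element of the output tensor.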

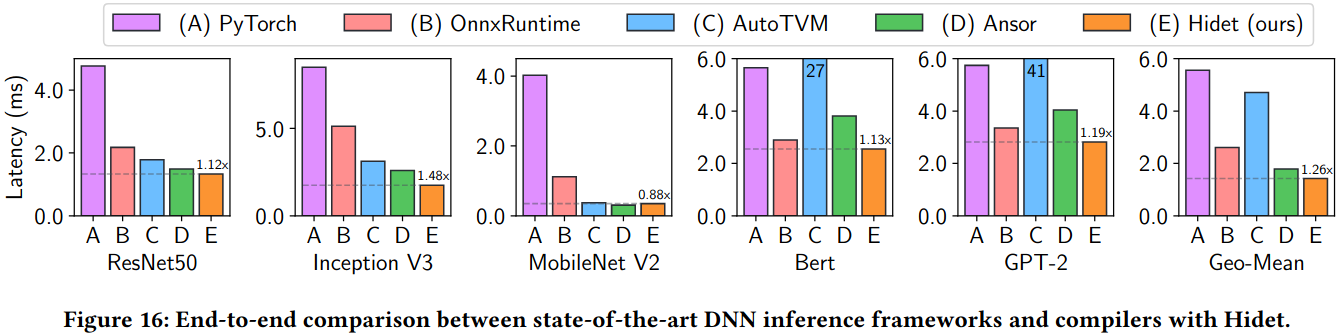
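The two steps can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not Hidet's implementation: the anchor operator is a matrix multiplication written as a plain triple loop (standing in for a tuned kernel), the prologue is a hypothetical element-wise scaling of the input, and the epilogue is a ReLU. Fusion here means that the anchor's loads are replaced by the prologue's computation and its stores by the epilogue's, while the anchor's loop structure stays untouched.

```python
def matmul_anchor(load_a, load_b, store_c, m, n, k):
    """Step 1: the scheduled anchor operator. It only sees load/store callbacks."""
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += load_a(i, p) * load_b(p, j)
            store_c(i, j, acc)


def fused_scale_matmul_relu(x, w, scale):
    """Step 2: fuse a prologue (scale) and an epilogue (ReLU) into the anchor."""
    m, k, n = len(x), len(w), len(w[0])
    out = [[0.0] * n for _ in range(m)]

    # Prologue (injective, no reduction): a[i][p] = x[i][p] * scale,
    # computed on the fly whenever the anchor loads a[i][p].
    def load_a(i, p):
        return x[i][p] * scale

    def load_b(p, j):
        return w[p][j]

    # Epilogue (each input element feeds exactly one output element):
    # ReLU is applied at the point where the anchor would store c[i][j].
    def store_c(i, j, value):
        out[i][j] = max(value, 0.0)

    matmul_anchor(load_a, load_b, store_c, m, n, k)
    return out


x = [[1, -2], [3, 4]]
identity = [[1, 0], [0, 1]]
print(fused_scale_matmul_relu(x, identity, 2))
```

Because the intermediate tensors (the scaled input and the pre-ReLU output) are never materialized, only the anchor operator needs a schedule, which is why the restrictions on prologue and epilogue operators above are enough to make the fusion automatic.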

Evaluation

Reference

Hidet: Task-Mapping Programming Paradigm for Deep Learning Tensor Programs
