Background

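Compiler optimizations restructure low-level tensor computations to exploit hardware features, but they are constrained by the requirement to preserve the correctness (semantic equivalence) of the computation.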

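NAS, in contrast, exploits the robustness of neural networks. It improves performance by transforming the network architecture itself, for example with grouped convolutions or bottleneck layers.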

Our Approach

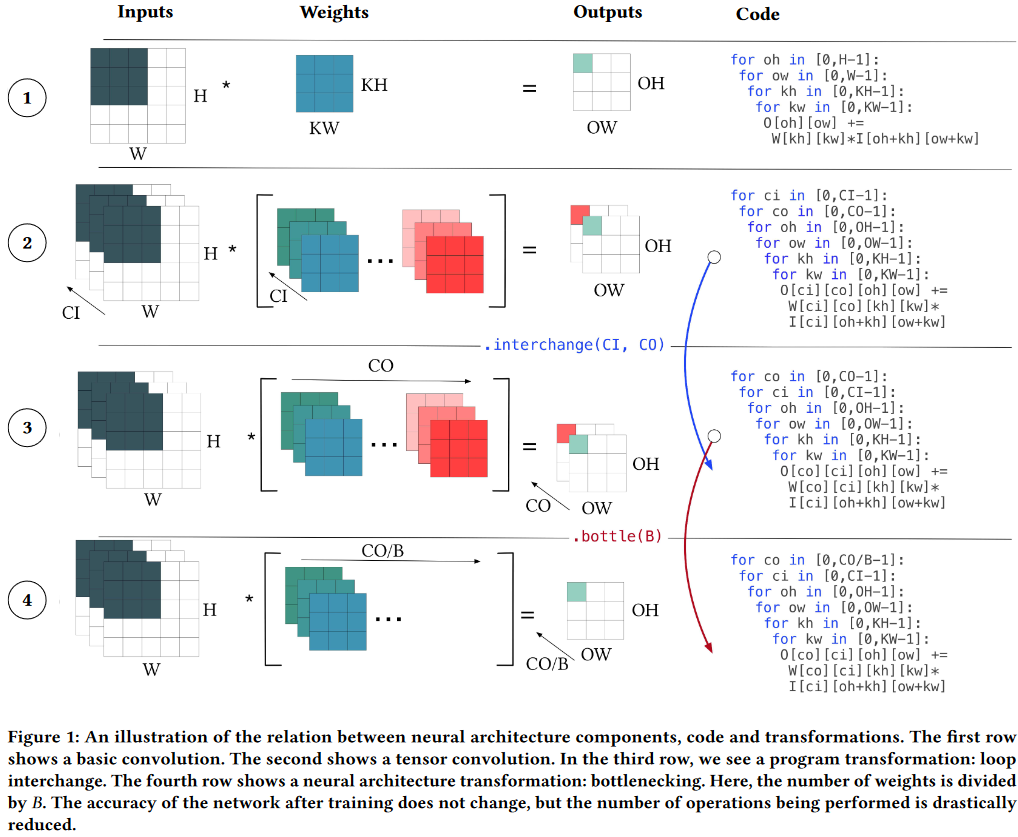

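The paper re-expresses NAS architecture operations as program transformations, so that they can be combined with existing compiler transformations.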

Overview

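- Code Transformation: does not change the values the computation produces, only its memory access pattern, e.g., loop interchange.

- Model Transformation: changes the size of the weight tensor and the range of the outer loops, e.g., Bottlenecking.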
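A minimal sketch of the distinction, assuming a pointwise (1x1) convolution so that only the two channel loops remain (spatial positions are flattened into one axis). The names `pointwise_conv` and `bottleneck_weights` are hypothetical and not taken from the paper. Interchanging the loops leaves the output unchanged, while bottlenecking shrinks the `co` loop range and the weight tensor, which yields a different model.

```python
import numpy as np

def pointwise_conv(x, w, interchange=False):
    """1x1 convolution written as an explicit loop nest over channels.
    x: (C_in, P) input, P = flattened spatial positions; w: (C_out, C_in)."""
    c_out, c_in = w.shape
    y = np.zeros((c_out, x.shape[1]))
    if not interchange:
        for co in range(c_out):      # original order: output channels outermost
            for ci in range(c_in):
                y[co] += w[co, ci] * x[ci]
    else:
        for ci in range(c_in):       # code transformation: the two loops interchanged
            for co in range(c_out):
                y[co] += w[co, ci] * x[ci]
    return y

def bottleneck_weights(w, factor, rng):
    """Model transformation (hypothetical helper): shrink the co loop range by `factor`.
    The weight tensor changes shape, so it is freshly initialised and must be retrained."""
    c_out, c_in = w.shape
    return rng.standard_normal((c_out // factor, c_in))

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 5))
w = rng.standard_normal((8, 4))
same = np.allclose(pointwise_conv(x, w), pointwise_conv(x, w, interchange=True))
w_b = bottleneck_weights(w, 2, rng)
print(same, pointwise_conv(x, w_b).shape)
```

The interchanged nest computes exactly the same values, so a compiler may apply it freely. The bottlenecked layer outputs fewer channels and must be retrained, so its legality cannot be judged by semantic equivalence.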

Neural Architecture Search

Basic principles

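- Start from an overall network skeleton and design cells that can be inserted at different positions in it.

- Each cell is described as a DAG whose nodes are intermediate feature maps and whose edges are candidate operations.

- The goal is to find the best DAG structure, insert it into the skeleton, and train the resulting network on a given dataset.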
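The cell-as-DAG idea can be sketched as follows. This assumes a toy set of candidate operations on 1-D arrays; `CANDIDATE_OPS`, `sample_cell` and `run_cell` are illustrative names, and real search spaces use convolutions, pooling and skip connections instead. Node 0 is the cell input, every edge `i -> j` carries one chosen operation, and each node sums its transformed predecessors.

```python
import itertools
import random
import numpy as np

# Toy stand-ins for the candidate operations on an edge (assumed for illustration).
CANDIDATE_OPS = {
    "zero": lambda x: np.zeros_like(x),
    "identity": lambda x: x,
    "relu": lambda x: np.maximum(x, 0.0),
    "avg_pool3": lambda x: np.convolve(x, np.ones(3) / 3, mode="same"),
}

def sample_cell(num_nodes, rng):
    """Assign one candidate op to every edge i -> j (i < j) of the cell DAG."""
    return {(i, j): rng.choice(sorted(CANDIDATE_OPS))
            for i, j in itertools.combinations(range(num_nodes), 2)}

def run_cell(cell, num_nodes, x):
    """Evaluate nodes in topological order; the last node is the cell output."""
    nodes = [x]
    for j in range(1, num_nodes):
        nodes.append(sum(CANDIDATE_OPS[cell[(i, j)]](nodes[i]) for i in range(j)))
    return nodes[-1]

cell = {(0, 1): "identity", (0, 2): "relu", (1, 2): "identity"}
out = run_cell(cell, 3, np.array([-1.0, 2.0, -3.0]))
print(out)
```

With 4 nodes there are 6 edges, so even this toy space holds 4^6 = 4096 cells. A search strategy picks among them, and the chosen cell is then inserted into the skeleton and trained.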

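Traditional NAS composes operations from a predefined list. This paper instead generates new operations from program transformations, which makes it possible to discover operation types that are not in the predefined list.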
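To illustrate how composing transformations can reach operations outside a predefined list, here is a sketch that tracks only the shape of a KxK convolution (`ConvSpec`, a hypothetical name) and applies every sequence of up to two transformations. The applicability rules and the factor of 2 are assumptions chosen for the example, not the paper's exact definitions.

```python
from dataclasses import dataclass, replace
from itertools import product

@dataclass(frozen=True)
class ConvSpec:
    """Shape of a KxK convolution: output/input channels and number of groups."""
    c_out: int
    c_in: int
    groups: int = 1

    def params(self, k=3):
        # each output channel only reads c_in / groups input channels
        return self.c_out * (self.c_in // self.groups) * k * k

# Hypothetical transformations; each returns None when it is not applicable.
def bottleneck(s, b=2):
    c = s.c_out // b
    return replace(s, c_out=c) if s.c_out % b == 0 and c % s.groups == 0 else None

def group(s, g=2):
    n = s.groups * g
    return replace(s, groups=n) if s.c_in % n == 0 and s.c_out % n == 0 else None

def depthwise(s):
    # one group per input channel (requires c_out to be a multiple of c_in)
    return replace(s, groups=s.c_in) if s.c_out % s.c_in == 0 else None

TRANSFORMS = {"bottleneck": bottleneck, "group": group, "depthwise": depthwise}

def reachable(start, max_len=2):
    """Apply every sequence of up to max_len transformations; collect distinct ops."""
    found = {start}
    for length in range(1, max_len + 1):
        for seq in product(TRANSFORMS.values(), repeat=length):
            s = start
            for t in seq:
                s = t(s)
                if s is None:  # sequence not applicable, skip it
                    break
            if s is not None:
                found.add(s)
    return found

ops = reachable(ConvSpec(64, 64))
print(len(ops), ConvSpec(32, 64, 2) in ops)
```

Starting from `ConvSpec(64, 64)`, the enumeration reaches 7 distinct operations. One of them is `ConvSpec(32, 64, 2)`, a bottlenecked grouped convolution with a quarter of the original parameters (9216 vs 36864 for K = 3), which neither bottlenecking nor grouping produces on its own.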

Unified Space

Extending the Polyhedral Model

- Bottlenecking

- Grouping

- Depthwise
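One way to read these transformations in loop-nest terms is as changes to the iteration domain of the channel loops. The sketch below assumes a grouped 1x1 convolution; `grouped_pointwise` is a hypothetical name, and the domain notation in the docstring is our own illustration, not the paper's formalism. Each output channel's `ci` loop is restricted to its own group. `groups=1` recovers the standard convolution, and `groups == C_in == C_out` gives a depthwise one.

```python
import numpy as np

def grouped_pointwise(x, w, groups):
    """Grouped 1x1 convolution as a loop nest.
    x: (C_in, P); w: (C_out, C_in // groups).
    Standard domain: {(co, ci) : 0 <= co < C_out, 0 <= ci < C_in}
    Grouped domain:  ci restricted to the C_in / groups channels of co's group.
    Bottlenecking would instead lower the upper bound of co."""
    c_out, cin_per_group = w.shape
    c_in = x.shape[0]
    if c_in != cin_per_group * groups or c_out % groups != 0:
        raise ValueError("channel counts must be divisible by groups")
    cout_per_group = c_out // groups
    y = np.zeros((c_out, x.shape[1]))
    for co in range(c_out):
        g = co // cout_per_group          # group that output channel co belongs to
        for k in range(cin_per_group):    # shrunken ci loop: only this group's inputs
            ci = g * cin_per_group + k
            y[co] += w[co, k] * x[ci]
    return y

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3))
w2 = rng.standard_normal((6, 2))          # 2 groups: 6 outputs, 2 inputs per group
y2 = grouped_pointwise(x, w2, groups=2)
```

With `groups=2`, the result equals a dense 1x1 convolution whose weight matrix is block-diagonal, which is a convenient way to check the loop bounds.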

Fisher Potential as a Legality Check

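- Traditional program transformations must preserve semantic equivalence, but neural networks tolerate some changes to their structure without losing their function.

- Fisher Potential provides a way to judge whether a transformation is legal without training the network.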
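A minimal NumPy sketch of the Fisher Potential, assuming the per-channel definition it builds on: for channel c, Δ_c = 1/(2N) · Σ_n (Σ_{h,w} a[n,c,h,w] · g[n,c,h,w])², summed over all channels. Here a are a layer's activations and g the gradients of the loss with respect to them, taken on a single minibatch of an untrained network. How activations and gradients are obtained is left out. `is_legal` and its `min_ratio` threshold are a hypothetical acceptance rule, not the paper's exact criterion.

```python
import numpy as np

def fisher_potential(acts, grads):
    """acts, grads: (N, C, H, W) activations of one layer and dLoss/dActivation
    for one minibatch. Returns the sum over channels of the per-channel Fisher term."""
    n = acts.shape[0]
    per_sample_channel = (acts * grads).sum(axis=(2, 3))     # (N, C)
    delta = (per_sample_channel ** 2).sum(axis=0) / (2 * n)  # Δ_c for each channel
    return float(delta.sum())

def is_legal(original, candidate, min_ratio=1.0):
    # hypothetical rule: keep a transformed layer only if it retains
    # at least min_ratio of the original layer's Fisher Potential
    return candidate >= min_ratio * original

a = np.ones((2, 1, 2, 2))
print(fisher_potential(a, a))  # each (n, c) term is 4, so (16 + 16) / (2 * 2)
```

Under this rule, a candidate whose potential stays close to the original would be kept and trained, while one whose potential collapses would be discarded before any training time is spent on it.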

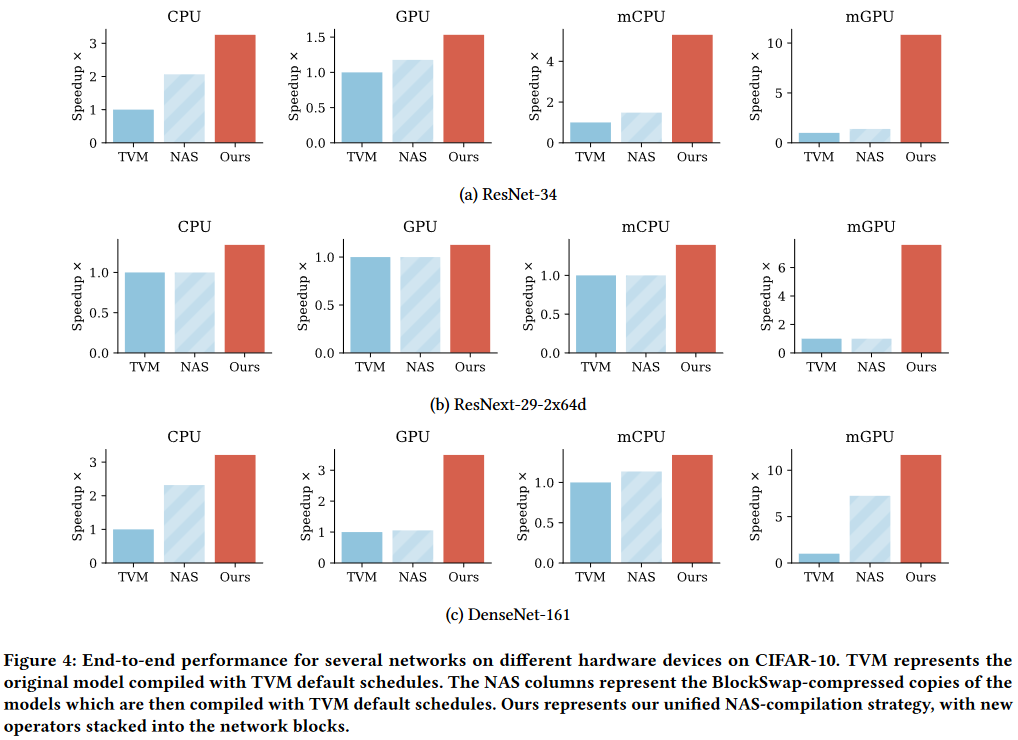

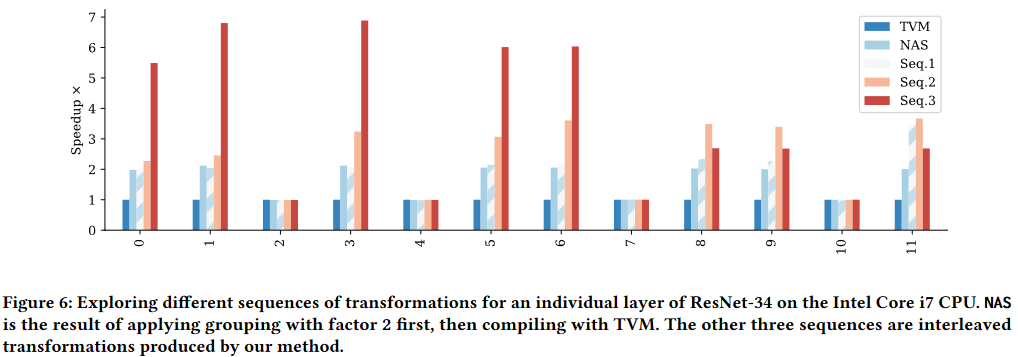

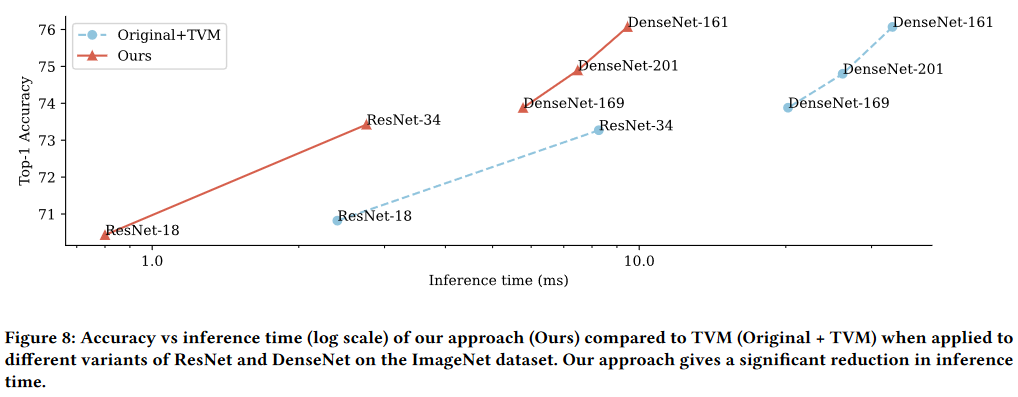

Evaluation

Thinking

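(1) The paper mainly targets the optimization of individual convolution layers. Transformations are applied at the layer level without considering interactions between layers, so it lacks a global view of the whole network architecture.

(2) A possible consequence: a local optimum is not necessarily a global optimum, and cross-layer optimization opportunities such as skip connections and feature reuse may be missed.

(3) Possible directions for improvement: build a model of the dependencies in the computation graph, analyze data-flow patterns, and identify the key bottlenecks; then optimize multiple layers jointly, taking inter-layer dependencies into account, optimizing data transfers, and balancing compute resources.

(4) The paper targets CNN architectures; it is difficult to extend to Transformer architectures.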

Reference

Neural Architecture Search as Program Transformation Exploration
